🦾 Shadow AI - 11 April 2024

Arming Security and IT Leaders for the Future

Forwarded this newsletter? Sign up for Shadow AI here.

Hello,

And welcome to this week’s edition (#31!) of Shadow AI.

This week, I’m sharing a blog post I wrote for Board Cybersecurity that extends my research on how companies and their cybersecurity teams are managing AI risk, as disclosed in their Annual Reports. Board Cybersecurity is a website that helps board directors, executive management, and investors assess, manage, and mitigate cybersecurity risk, and I’m excited to be helping them build out their AI content.

I also cover:

🔒 LLMs for Cybersecurity Tasks

🔺🔺🔺 Crescendo Attacks in LLMs

🏛️ Senate AI Report on the Horizon

👀 AI-Powered Social Media Monitoring

🧯 AI Security Command Center

💼 5 Cool AI Security Jobs of the Week

Let’s dive in!

Demystifying AI - Aligning Cybersecurity Strategies with the Rise of Generative AI in Business

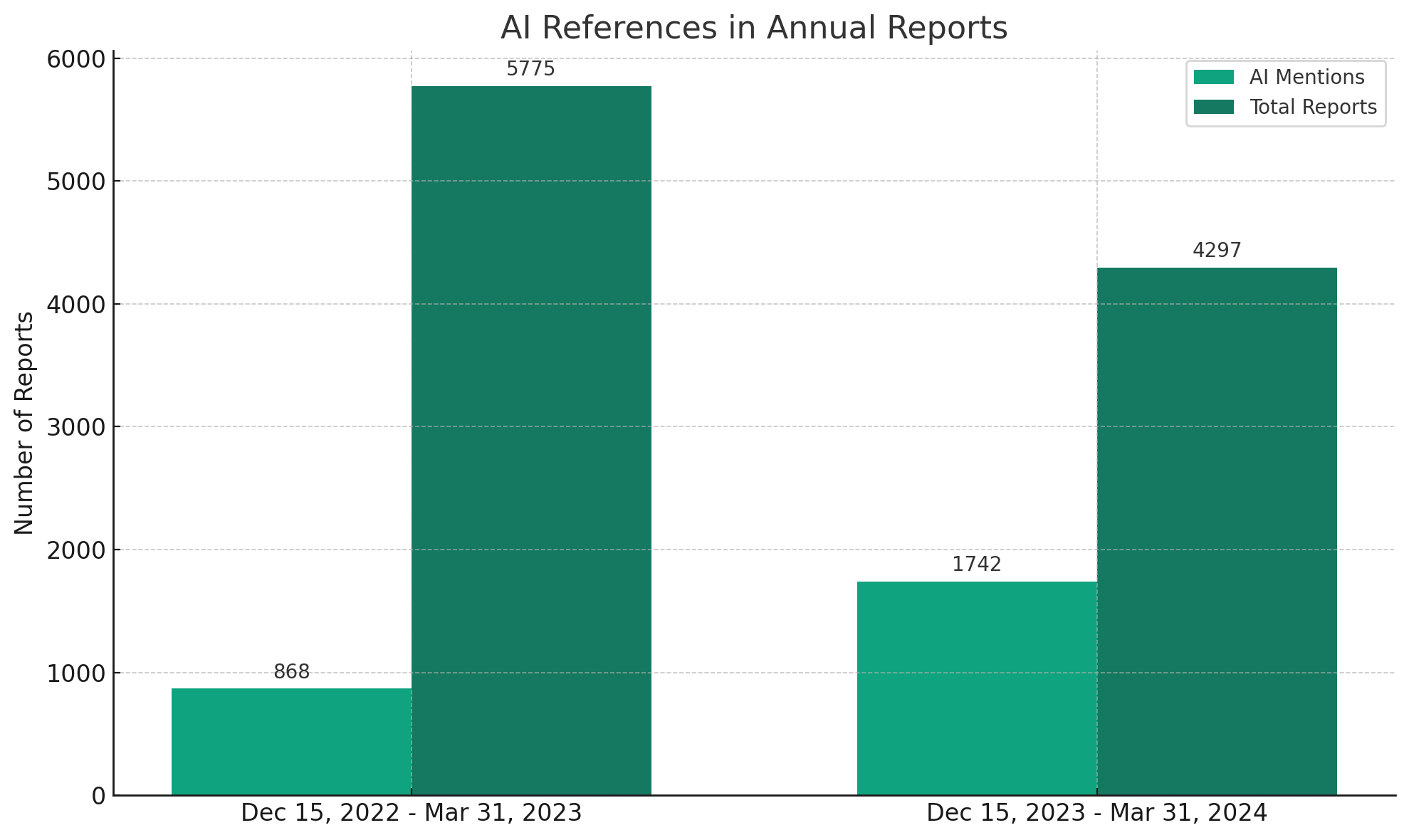

Generative Artificial Intelligence (AI) is making an unmistakable mark on business, and public company Annual 10-K Reports show it. A comparison of filings made between December 15, 2022, and March 31, 2023, with those made in the same window a year later (December 15, 2023, to March 31, 2024) shows a significant jump, from 15% to 40%, in the share of companies mentioning AI in their Annual 10-K Reports.

This notable increase in AI references within the latest 10-K filings predominantly centers on two aspects:

The competitive necessity to effectively incorporate AI into product offerings

The multifaceted risks AI introduces, spanning legal, business, operational, and security domains.

Collectively, the filings underscore an ongoing tension between leveraging AI for market advantage and implementing it securely. However, the newly required Item 1C cybersecurity disclosures say little about how companies are mitigating AI-induced cybersecurity risks.

Is There a Strategic Misalignment Between Business Goals and Cybersecurity Efforts?

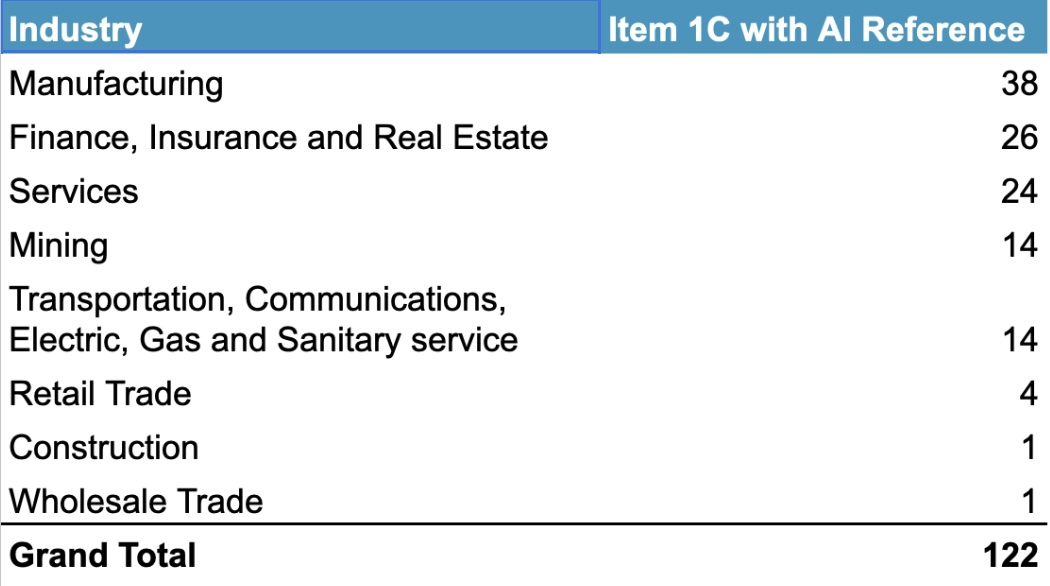

Although 1,742 10-K filings identify AI as either a pivotal business concern or a risk factor, only 122 filings (about 7% of that figure) explicitly mention AI in their Item 1C cybersecurity disclosure section.

These 122 filings address AI’s role in cybersecurity through one of four narratives:

Showcasing cybersecurity measures that employ AI for enhanced threat identification and mitigation.

Recognizing AI as a novel risk vector that may amplify vulnerabilities in information systems.

Demonstrating leadership’s competency in AI-related fields.

Adapting cybersecurity governance frameworks to include standards like the NIST AI Risk Management Framework.

Most AI-related cybersecurity disclosures align with the first three narratives; only a handful of companies, including a prominent semiconductor firm, cite the NIST AI Risk Management Framework as part of their cybersecurity governance.

A standout example is a $40 billion energy company that highlighted its Artificial Intelligence Steering Committee in Item 1C. The committee is tasked with setting the strategic direction for, overseeing, and advising on AI initiatives, ensuring they align with the company’s objectives, ethical standards, security protocols, and industry best practices so the company can foster innovation and maintain a competitive edge. It also regularly updates the Audit Committee on its progress.

Industry Insights

The industries most frequently mentioning AI in their Item 1C cybersecurity disclosures are:

Manufacturing

Finance, Insurance, and Real Estate

Services

The sector distribution is notable for one gap in particular: technology firms, despite being at the forefront of AI adoption, are underrepresented among companies that mention AI in their cybersecurity risk disclosures.

The Implications for Cybersecurity

The hallmark of a robust cybersecurity program is its ability to bolster business objectives. Yet, the data suggests a potential disconnect between perceived top business risks and the strategic response of cybersecurity risk management programs.

In future Annual 10-K Reports, companies have a significant opportunity to communicate more effectively to investors how their cybersecurity risk strategies align with both their AI ambitions and the evolving landscape of AI threats.

AI News to Know

LLMs for Cybersecurity Tasks: Last week in Shadow AI, we explored ways LLMs can redefine security teams in the future. This week, Carnegie Mellon University’s Software Engineering Institute and OpenAI published a white paper on how LLMs can be an asset for cybersecurity teams if evaluated properly. When evaluating LLMs, security professionals should consider the following:

Comprehensive Evaluations are Needed: Assessing cybersecurity expertise requires going beyond simple fact-based tests that play to the strengths of LLMs. Evaluations need to assess practical and applied knowledge by testing LLMs on realistic, complex cybersecurity tasks that require strategic thinking, risk assessment, and the ability to adapt to changing circumstances.

Three-Tiered Evaluation Approach: The paper recommends a three-part evaluation framework:

a. Theoretical Knowledge Evaluation: Assesses the LLM's understanding of core cybersecurity concepts and terminology.

b. Practical Knowledge Evaluation: Tests the LLM's ability to provide solutions to specific cybersecurity problems.

c. Applied Knowledge Evaluation: Evaluates the LLM's capability to achieve high-level objectives in open-ended cybersecurity scenarios.

Practical Challenges in Evaluation: Creating a sufficiently large and diverse set of evaluation questions/tasks, administering interactive evaluations, and defining appropriate metrics pose significant practical challenges. Evaluators must be cautious about training data contamination and avoid overgeneralized claims about LLM capabilities.

Recommendations for Robust Evaluations: A rigorous, multifaceted evaluation should include:

a. Defining real-world cybersecurity tasks as the basis for evaluations, considering how humans perform these tasks.

b. Representing tasks appropriately, avoiding oversimplification while providing necessary infrastructure and affordances.

c. Making evaluations robust by using techniques like preregistration, input perturbation, and addressing training data contamination.

d. Framing evaluation results carefully, avoiding overconfident claims about capabilities and clarifying whether the evaluation is assessing risk or capabilities.
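The three-tier framework and the input-perturbation recommendation can be sketched as a small evaluation harness. This is a minimal Python sketch under stated assumptions, not the white paper’s method: `EvalItem`, the tier labels, `toy_model`, and the exact-match scoring are all hypothetical stand-ins, and a real harness would call an actual LLM and use graders suited to each task.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tier labels mirroring the three-part framework described above.
TIERS = ("theoretical", "practical", "applied")


@dataclass
class EvalItem:
    tier: str           # one of TIERS
    prompt: str         # canonical wording of the task
    expected: str       # reference answer; exact match is a simplification
    perturbations: list[str] = field(default_factory=list)  # equivalent rewordings


def normalize(text: str) -> str:
    return text.strip().lower()


def evaluate(model: Callable[[str], str], items: list[EvalItem]) -> dict[str, dict[str, float]]:
    """Return per-tier accuracy and perturbation consistency.

    Consistency is the fraction of rewordings that get the same answer as the
    canonical prompt. High accuracy with low consistency hints that the model
    may be keying on surface phrasing rather than the underlying task.
    """
    total, correct = defaultdict(int), defaultdict(int)
    variants, consistent = defaultdict(int), defaultdict(int)
    for item in items:
        answer = normalize(model(item.prompt))
        total[item.tier] += 1
        correct[item.tier] += answer == normalize(item.expected)
        for variant in item.perturbations:
            variants[item.tier] += 1
            consistent[item.tier] += normalize(model(variant)) == answer
    return {
        tier: {
            "accuracy": correct[tier] / total[tier],
            # No rewordings supplied: treat the tier as trivially consistent.
            "consistency": consistent[tier] / variants[tier] if variants[tier] else 1.0,
        }
        for tier in TIERS
        if total[tier]
    }


def toy_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM: it only recognizes one exact phrasing.
    canned = {"what port does https use by default?": "443"}
    return canned.get(normalize(prompt), "unknown")


items = [
    EvalItem(
        tier="theoretical",
        prompt="What port does HTTPS use by default?",
        expected="443",
        perturbations=["Which TCP port is the default for HTTPS?"],
    ),
]
print(evaluate(toy_model, items))
# {'theoretical': {'accuracy': 1.0, 'consistency': 0.0}}
```

Exact-match scoring only suits short factual items; the practical and applied tiers would need graders that can judge open-ended output, which is one reason defining appropriate metrics is listed as a practical challenge.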

Crescendo Attacks in LLMs: Microsoft released a research paper on Crescendo, a multi-turn attack that begins with a general prompt or question and then gradually escalates the dialogue by referencing the model’s replies, progressively leading to a successful jailbreak of the LLM. In essence, LLMs can be socially engineered into providing harmful or dangerous responses. Fine-tuning models with content specifically designed to trigger Crescendo jailbreaks is one potential mitigation technique.
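Because each Crescendo turn can look harmless on its own, a filter that scores messages one at a time may never trigger. The toy Python sketch below illustrates that gap by comparing a per-message check with a conversation-level check. It is an illustration under stated assumptions, not a mitigation from Microsoft’s paper: the `risk` values stand in for a hypothetical content classifier, the prompts are placeholders, and both thresholds are arbitrary.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Turn:
    user_message: str
    risk: float  # hypothetical classifier score in [0, 1] for this message alone


PER_TURN_THRESHOLD = 0.5      # arbitrary: block any single message scoring above this
CONVERSATION_THRESHOLD = 1.0  # arbitrary: flag once the running total exceeds this


def per_turn_flags(turns: list[Turn]) -> list[bool]:
    """Stateless filter: judges each message in isolation."""
    return [t.risk > PER_TURN_THRESHOLD for t in turns]


def first_conversation_flag(turns: list[Turn]) -> Optional[int]:
    """Stateful check: accumulates risk across the dialogue and returns the
    index of the first turn where the total crosses the threshold, else None."""
    total = 0.0
    for i, t in enumerate(turns):
        total += t.risk
        if total > CONVERSATION_THRESHOLD:
            return i
    return None


# Placeholder conversation: each turn builds on the model's previous reply and
# pushes slightly further, but no single message looks alarming on its own.
conversation = [
    Turn("<general question about a topic>", 0.1),
    Turn("<ask to expand on part of the previous answer>", 0.2),
    Turn("<ask for more specifics, quoting the model's reply>", 0.3),
    Turn("<ask to combine the specifics into a final write-up>", 0.45),
]

print(per_turn_flags(conversation))           # [False, False, False, False]
print(first_conversation_flag(conversation))  # 3
```

A plain running sum will eventually flag any long conversation, so a practical version would need decay, a sliding window, or a classifier that reads the whole transcript; the sketch only shows why conversation-level state matters against multi-turn escalation.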

Senate AI Report on the Horizon: Last fall, we reported on the AI Insight Forums that Senate Majority Leader Chuck Schumer hosted, covering topics ranging from AI innovation to copyright and IP to workforce and security implications. The Senate AI working group’s report is expected in May and should offer a framework for legislation. Building momentum for AI legislation in an election year, however, will be challenging.

AI on the Market

AI-Powered Social Media Monitoring: Alethea, which built Artemis to use AI to detect, assess, and respond to disinformation risk, has raised a $20M Series B round led by Google Ventures. Its technology is designed to help address influence operations such as the campaigns we’re seeing from China to stoke social tensions in the US and Taiwan.

AI Security Command Center: StrikeReady raised $12M in Series A funding for its AI Security Command Center, which aims to redefine how modern SOC teams operate. The platform uses AI to streamline and automate routine SOC tasks, speed up threat response, and reduce SOC tool sprawl.

💼 5 Cool AI Security Jobs of the Week 💼

Senior AI Security Engineer @ eBay to safeguard eBay’s AI projects | New York | $168k - $262k | 6+ yrs exp.

National AI Talent Surge @ US Government to help Uncle Sam responsibly leverage AI | Various Locations and Roles

Technical Program Manager - GenAI Security and Compliance @ DynamoFL to orchestrate feature development, prioritizing security, safety, and compliance, helping customers embed compliance throughout their AI stacks | Remote

Sr. Lead Security Engineer - AI/ML @ JPMorgan Chase to develop innovative and secure AI capabilities | Columbus, OH or Tampa, FL

Customer Advocacy Manager @ Vectra AI to drive customer references and maintain lead ranking on Gartner Peer Insights | San Jose, CA | $60k-$72k | Entry Level

If you enjoyed this newsletter and know someone else who might like Shadow AI, please share it!

Until next Thursday, humans.

-Andrew Heighington